The global AI landscape is evolving rapidly, with the US, China and the UK leading AI model development, ahead of the rest of Europe. When it comes to GenAI, many experts describe the current environment as a “move fast and break things” era, with a risk that safety considerations are taking a backseat. In a new report, BNP Paribas Equity Research analysts reflect on the current state of play and examine how investors are responding to this race towards Artificial General Intelligence (AGI).

To do this, our analysts review the responsible AI strategies of 15 asset managers to identify common themes, points of divergence, and opportunities for growth. They find that sustainability-minded investors widely perceive AI as a promising investment opportunity, with real benefits observed across various sectors. To deepen their knowledge of the theme, investors are starting to differentiate between AI developers and deployers, are actively building a comprehensive understanding of AI-related risks, and are seeking best practices whilst increasing their exposure to the sector.

❝ We are in a ‘move fast and break things’ era when it comes to genAI. With safety considerations taking a backseat, investor engagement will become even more important. ❞

Attention shifts to deployers as adoption accelerates

Investors see AI as an exciting investment opportunity rather than an overhyped trend. GenAI is already delivering real efficiencies in many industries, including healthcare and life sciences, financial services, telecommunications, consumer/retail, and manufacturing.

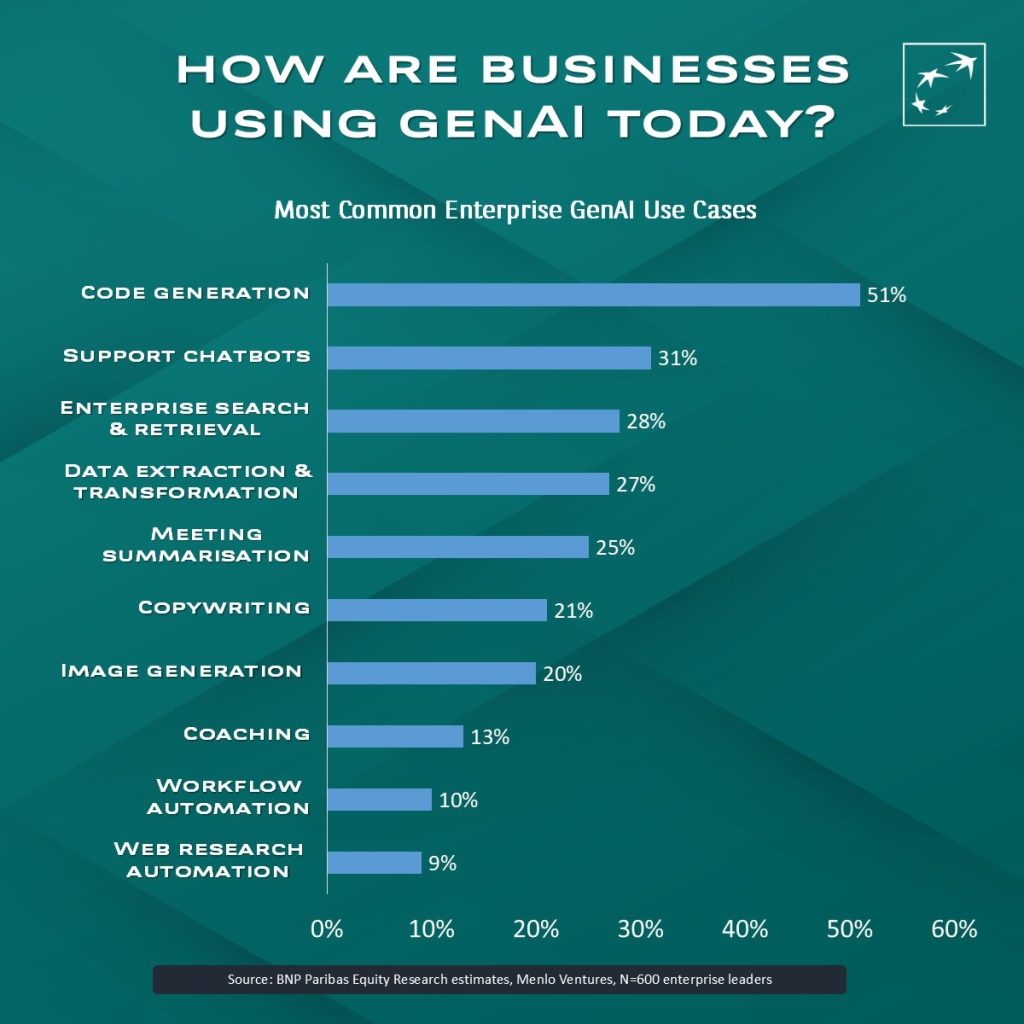

The report provides several examples of how companies use responsible AI. Code generation is the most common use case; other obvious examples include support chatbots, data extraction, meeting summarisation, and image generation.

Responsible AI for the greater good: smart solutions for a sustainable future

ESG investors are particularly interested in companies that promise to use AI to improve healthcare and support the energy transition. AI has the potential to transform how we diagnose patients, and it could be used to improve access to, and the affordability of, healthcare in developing countries. GenAI could also deepen our understanding of climate change: weather prediction models can give scientists a better grasp of extreme weather, allowing for better preparedness. In the future, AI could also play an increasing role in the energy transition by speeding up research into, and deployment of, technologies such as small modular nuclear reactors, nuclear fusion, clean energy solutions like carbon capture, utilisation and storage (CCUS) and sustainable aviation fuel (SAF), electric vehicle charging, and sustainable materials.

Growing interest in responsible AI from ESG investors

Alongside this rising interest, investors are also developing a deeper understanding of AI-related risks, which ESG investors believe will become increasingly material.

In their Responsible AI investor framework, BNP Paribas Equity Research analysts split AI-related risks into two groups: i) societal harms arising from existing model capabilities, and ii) catastrophic risks that could arise as models become more capable. The former include discrimination in decision-making, harmful content, misinformation, data privacy, competition concerns, unemployment, cybersecurity and environmental impacts. Catastrophic risks cover the potential for AI to be used by malicious actors, or for models to escape human control.

Among the responsible AI strategies of the 15 asset managers assessed, only a few frameworks place significant emphasis on catastrophic risks such as the loss of human control over AI; more frameworks target immediate societal risks like bias. Frameworks rarely detail responsible AI materiality by sector; instead, investors tend to concentrate their efforts on the most exposed or at-risk companies.

MIT’s AI Incident Tracker – a database that classifies incidents based on risks and harm severity – shows that the proportion of reported cases related to misinformation and malicious use has grown every year since 2020. In 2024, these risks represented more than half of all incidents reported. This illustrates a landscape increasingly shadowed by misuse.

Investors are also looking for clearer signals from companies on how AI is being developed and deployed. To that end, they are asking for more standardised data and disclosures, and for greater transparency overall. Companies are therefore likely to come under increasing pressure to demonstrate that their AI systems are safe, fair, unbiased, accurate and beneficial.

When it comes to engagement with companies, investors are prioritising issues such as cybersecurity, the resource consumption of data centres, and AI corporate governance, especially at board level. Our analysts expect that investors could also focus on further challenges in future, such as re-training and re-skilling the workforce, and sector-specific benefits and risks.

Navigating AI regulation

The regulatory landscape for AI is complex, with concerns rising on both sides of the Atlantic that too much regulation could stifle innovation. As a result, some regulators have started to review and adapt their policies. In the US, for example, the Trump administration has repealed Biden-era safety-focused AI policies in favour of deregulation and innovation, and has launched initiatives like Project Stargate to bolster AI infrastructure. In the UK, the regulatory debate is still ongoing, with some alignment towards the US model.

In the EU, the AI Act is already law, and its requirements began phasing in earlier this year. The regulation is intended to become a global benchmark for AI regulation. Key provisions for general-purpose AI models are expected to take effect at the beginning of August 2025, despite pushback from US big tech and European companies. Meanwhile, China is advancing its regulatory framework with new rules on labelling AI-generated content and securing training datasets.

With a renewed sense of urgency to develop the most powerful model, safety considerations are taking a backseat and risk mitigation efforts are struggling to keep up. Without sufficient regulation, investor engagement on responsible AI will become even more important.

For more details on BNP Paribas Exane’s research, please visit:

Cash Equities | Global Markets

BNP Paribas does not consider this content to be “Research” as defined under the MiFID II unbundling rules. If you are subject to inducement and unbundling rules, you should consider making your own assessment as to the characterisation of this content. Legal notice for marketing documents, referencing to whom this communication is directed.